As tech teams grow, every executive wants to know whether they are still high-performing. McKinsey, Gartner, and several industry luminaries have all come up with their own answers, but because every company is different, we felt that something was always missing from what was out there.

We have thought a lot about developer productivity and performance, and we have built our own framework, which has shown good results so far. In this post, I will summarise the core pillars we rely on for measuring and driving engineering performance. If you are on a similar hyper-scaling path, this could serve as a good starting point for you to adapt and improve.

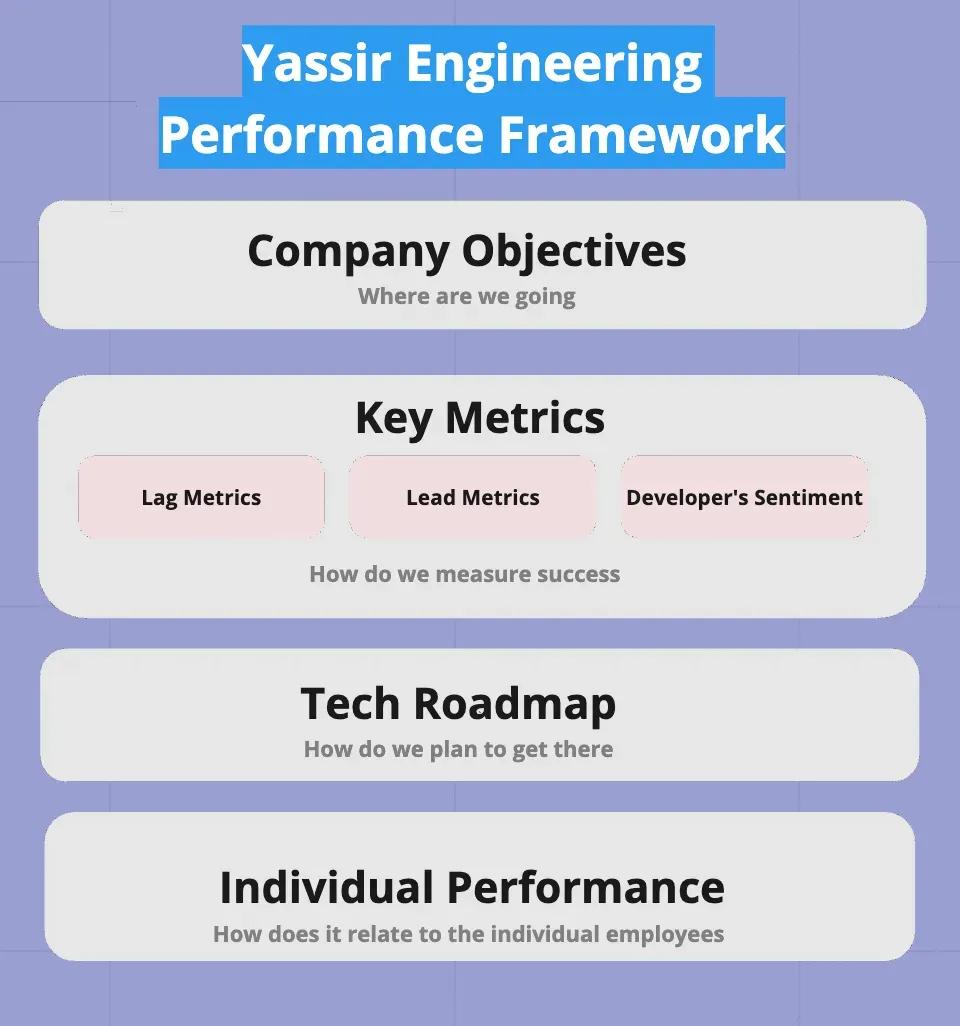

Our performance framework relies on 4 pillars:

- Company OKRs to set the direction and provide alignment

We use the OKR framework to define and communicate what the teams need to achieve during a set period. Objectives are shared among product and tech teams. Each team inherits a set of ambitious OKRs that cover business outcomes as well as engineering performance goals, and the teams help co-create these objectives.

The most important engineering objectives that we share are:

- Velocity: how fast we can deliver

- Quality & Reliability: how stable and defect-free our services are

OKRs are a great way to ensure smooth execution and build a culture of accountability. The co-creation element helps strengthen ownership, and the shared nature of the OKRs provides the required focus.

We tend to overshare and over-communicate about our OKRs internally, reminding the teams on every occasion about the objectives and overall progress.

- Key metrics and targets to measure progress

Once you have your objectives, you need to define the target key results to achieve. We figured there are three categories of metrics that are important for us:

a) Lag metrics to define what success looks like

In our case, each objective described above comes with 3–4 key results. To set the targets, we rely on DORA metrics as well as a home-grown “reliability score” and a Tech Debt Index.

From one quarter to the next, we might add further key results, usually aligned with target benchmarks. These “lag metrics” (i.e. metrics that show outcomes) are great for aligning teams and giving them a clear definition of success to strive for.
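To make the lag metrics concrete, here is a minimal sketch of how the four DORA metrics could be computed for one reporting period. The `Deployment` and `Incident` records, their field names, and the choice of the median as the aggregate are assumptions made for illustration; they are not a description of our internal tooling, and the home-grown reliability score and Tech Debt Index are not covered.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Deployment:
    # Hypothetical record shape; adapt to whatever your CI/CD tool exports.
    committed_at: datetime   # when the change was committed
    deployed_at: datetime    # when it reached production
    caused_failure: bool     # True if it led to an incident or rollback


@dataclass
class Incident:
    started_at: datetime     # when service degradation began
    restored_at: datetime    # when service was restored


def hours_between(start, end):
    """Elapsed time between two datetimes, in hours."""
    return (end - start).total_seconds() / 3600


def dora_metrics(deployments, incidents, period_days):
    """Return the four DORA metrics for one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment in the period")
    lead_times = [hours_between(d.committed_at, d.deployed_at) for d in deployments]
    restore_times = [hours_between(i.started_at, i.restored_at) for i in incidents]
    failures = sum(1 for d in deployments if d.caused_failure)
    return {
        # Median is an assumption here; some teams prefer the mean or a percentile.
        "lead_time_hours": median(lead_times),
        "deployments_per_day": len(deployments) / period_days,
        "change_failure_rate": failures / len(deployments),
        # No incidents in the period means there is nothing to restore.
        "time_to_restore_hours": median(restore_times) if restore_times else None,
    }
```

Computing these per team and per quarter gives a baseline against which key-result targets can be set.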

b) Lead metrics to foster the conditions of success

We noticed that providing a clear idea of where we want to go is not enough to achieve high performance. We also need to create the conditions and environment for it. We do this by tracking “lead metrics” (i.e. input-based metrics that show what is being done). Here, our focus is on two areas:

- Team Composition: we have strong views on what a high-performing team looks like, from team size (smaller teams tend to perform better) to the skills it contains (cross-functional teams tend to perform better) and its level of seniority. We call this the Yassir SAUCE. We track how each team fares on this team composition index and strive to bring every team up to the ideal.

- Capacity Allocation: we monitor how engineering effort is spent and ensure that a certain share is dedicated to maintenance and tech improvement (vs. building new capabilities), sprint over sprint.
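The capacity allocation check can be sketched as follows. This is an illustration under assumptions: the category labels, the `(sprint, category, story_points)` tuple shape, and the 20% floor are hypothetical and would need to match how your own tracker labels work.

```python
from collections import defaultdict

# Hypothetical labels for work that counts as maintenance or tech improvement.
MAINTENANCE_CATEGORIES = {"maintenance", "tech_improvement"}


def maintenance_share_by_sprint(work_items):
    """Share of each sprint's story points spent on maintenance work.

    work_items: iterable of (sprint, category, story_points) tuples.
    """
    total = defaultdict(float)
    maintenance = defaultdict(float)
    for sprint, category, points in work_items:
        total[sprint] += points
        if category in MAINTENANCE_CATEGORIES:
            maintenance[sprint] += points
    # Skip sprints with no recorded points to avoid dividing by zero.
    return {s: maintenance[s] / total[s] for s in total if total[s] > 0}


def sprints_below_floor(shares, floor=0.2):
    """Sprints whose maintenance share fell under the agreed floor (assumed 20%)."""
    return sorted(s for s, share in shares.items() if share < floor)
```

Reviewing the flagged sprints in retrospectives keeps the conversation about trends rather than about any single sprint.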

c) Sentiment analysis as a reality check

We can spend a lot of time in Excel sheets tweaking the best formula for high-performing teams, but nothing replaces unfiltered feedback from the front-line teams to tell us how well we are doing. A solid performance framework should also make space for the voice of the engineers.

We run several surveys throughout the year to capture this voice. Employee NPS (eNPS) is a critical metric for us. We also regularly measure psychological safety, developer satisfaction, and our agile maturity. This feedback helps us calibrate our interventions and identify gaps that would otherwise go unnoticed.
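For readers unfamiliar with eNPS, the standard calculation takes answers on a 0–10 "how likely are you to recommend working here" scale and subtracts the percentage of detractors (0–6) from the percentage of promoters (9–10). A minimal sketch, where the function name and input format are assumptions:

```python
def enps(scores):
    """Employee Net Promoter Score from 0-10 survey answers.

    Returns a value between -100 and 100.
    """
    if not scores:
        raise ValueError("need at least one survey response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(scores)
```

For example, ten responses with four promoters and three detractors give an eNPS of 10.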

- Tech roadmap to ensure execution

Once we have goals and targets, we can layer in initiatives that enable us to move the needle. Initiatives are organised into a technical roadmap that lives alongside our product roadmap and includes activities such as tech debt management, platform improvements, etc. We also hold demo days and architecture reviews to check on progress and offer a forum for discussion and feedback. We tend to invest 20–30% of our capacity into the tech roadmap each quarter.

Having a plan and a cadence to review progress helps us strengthen our execution muscle and hold a high standard of accountability.

- Continuous monitoring of individual performance to foster growth & development

The last bit of the framework is about individual performance. Performance reviews are a powerful tool to shape certain behaviors and identify and grow your top performers. You will need a standardized career framework that clarifies the expectations for each role and what is needed to move to the next level. At Yassir, every engineer is evaluated against 4 broad behaviors that reflect our performance expectations as well as our values. Crucially, we decouple individual performance from OKR execution.

We recommend running performance reviews twice a year instead of just once, which helps capture a more accurate picture. In fact, feedback should not be limited to one or two reviews a year: we encourage all our managers to run 1:1 meetings with their direct reports to give and receive feedback continuously. We find that kind of direct communication to be the number one productivity booster.

Wrapping up

To summarise, hundreds of models for measuring engineering performance exist. Choose the one that suits your environment, as no two companies are alike. Ours, built through trial and error and by observing thousands of developer hours, relies on four key pillars:

- Company objectives, shared across product and tech teams, that cover velocity and quality

- Key metrics and ambitious targets that track outcomes, key activities, and developer sentiment

- A tech roadmap that highlights what needs to be done and by whom

- Individual performance reviews that link company performance objectives and expectations to individual career goals and growth plans

This framework has been instrumental in overhauling Yassir's tech capabilities. We’ve seen substantial improvement in our lead time for changes (-30%), deployment frequency (+100%), and mean time to restore (-90%), as well as in developer satisfaction (as measured by eNPS).

The key is to combine the right mix of metrics (aligned with your business goals) with a solid discipline of execution and excellence. In doing so, do not rely on metrics alone or apply them too eagerly, as that approach will almost certainly fail. Neither should you overlook basic HR hygiene (competitive pay, work conditions, etc.) or your team’s motivation and well-being. It’s a system, and for it to work, you will need to find the right balance.

Ultimately, the most important thing is to start somewhere. As long as your high-performance expectations are clear and shared by everyone, you are on the right path. Perhaps one of the biggest risks with such an endeavor is to over-engineer it and, in doing so, get distracted and lose focus on the business. High performance makes sense if and only if it enables us to serve our customers better.